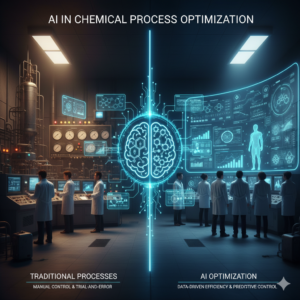

Understanding Large Language Models: How They Actually Work

Large Language Models (LLMs) have transformed the way we interact with technology, powering tools for writing, customer support, coding, and research. While they may appear intelligent, their functionality is based on patterns, probabilities, and vast amounts of training data rather than human-like understanding. Knowing how LLMs work can help users make the most of them while recognizing their limits.

What is a Large Language Model?

A Large Language Model is an AI system trained to predict and generate text based on patterns it has learned from massive datasets. Rather than storing its training text verbatim, it learns statistical relationships between words, sentences, and concepts. Its main function is predicting what comes next in a sequence of text; repeating that prediction step by step allows it to produce coherent and contextually appropriate responses across a wide variety of tasks.

Tokens: The Building Blocks of Language

LLMs process text by breaking it into smaller units called tokens. Tokens can be whole words, subwords, or punctuation marks. Each token is mapped to an integer ID and then to a vector of numbers (an embedding), which is the form the model actually computes with. At each step, the model calculates a probability for every token in its vocabulary and uses those probabilities to choose the next token, so a response is generated one token at a time.
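
To make the text-to-numbers step concrete, here is a minimal sketch of the pipeline from text to tokens to IDs to vectors. It assumes a tiny hand-written vocabulary (`VOCAB`), a greedy longest-match splitter, and a randomly filled embedding table; all of these names are hypothetical. Production tokenizers such as byte-pair encoding learn their vocabularies from data and may split text differently, and real embeddings are learned rather than random.

```python
import random

# Hypothetical toy vocabulary mapping subword strings to integer IDs.
# Real tokenizers learn vocabularies with tens of thousands of entries.
VOCAB = {
    "un": 0, "believ": 1, "able": 2, "token": 3, "s": 4, "!": 5,
    " ": 6, "a": 7, "b": 8, "e": 9, "l": 10,
}

def tokenize(text: str, vocab: dict) -> list:
    """Split text into vocabulary pieces by greedy longest match."""
    max_len = max(len(piece) for piece in vocab)
    pieces, i = [], 0
    while i < len(text):
        # Try the longest possible piece first, then progressively shorter ones.
        for length in range(min(max_len, len(text) - i), 0, -1):
            candidate = text[i:i + length]
            if candidate in vocab:
                pieces.append(candidate)
                i += length
                break
        else:
            raise ValueError(f"no vocabulary entry covers {text[i]!r}")
    return pieces

def encode(text: str, vocab: dict) -> list:
    """Map each token to its integer ID."""
    return [vocab[piece] for piece in tokenize(text, vocab)]

# Hypothetical embedding table: one small vector per token ID.
# In a real model these vectors are learned parameters, not random numbers.
EMBED_DIM = 4
_rng = random.Random(0)
EMBEDDINGS = [[_rng.uniform(-1, 1) for _ in range(EMBED_DIM)] for _ in range(len(VOCAB))]

def embed(token_ids: list) -> list:
    """Look up the vector for each token ID."""
    return [EMBEDDINGS[t] for t in token_ids]

ids = encode("unbelievable tokens!", VOCAB)
print(tokenize("unbelievable tokens!", VOCAB))
# ['un', 'believ', 'able', ' ', 'token', 's', '!']
print(ids)  # [0, 1, 2, 6, 3, 4, 5]
print(len(embed(ids)), len(embed(ids)[0]))  # 7 4
```

Note how "unbelievable" becomes three subword tokens: a word the vocabulary has never seen whole can still be represented from smaller pieces.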

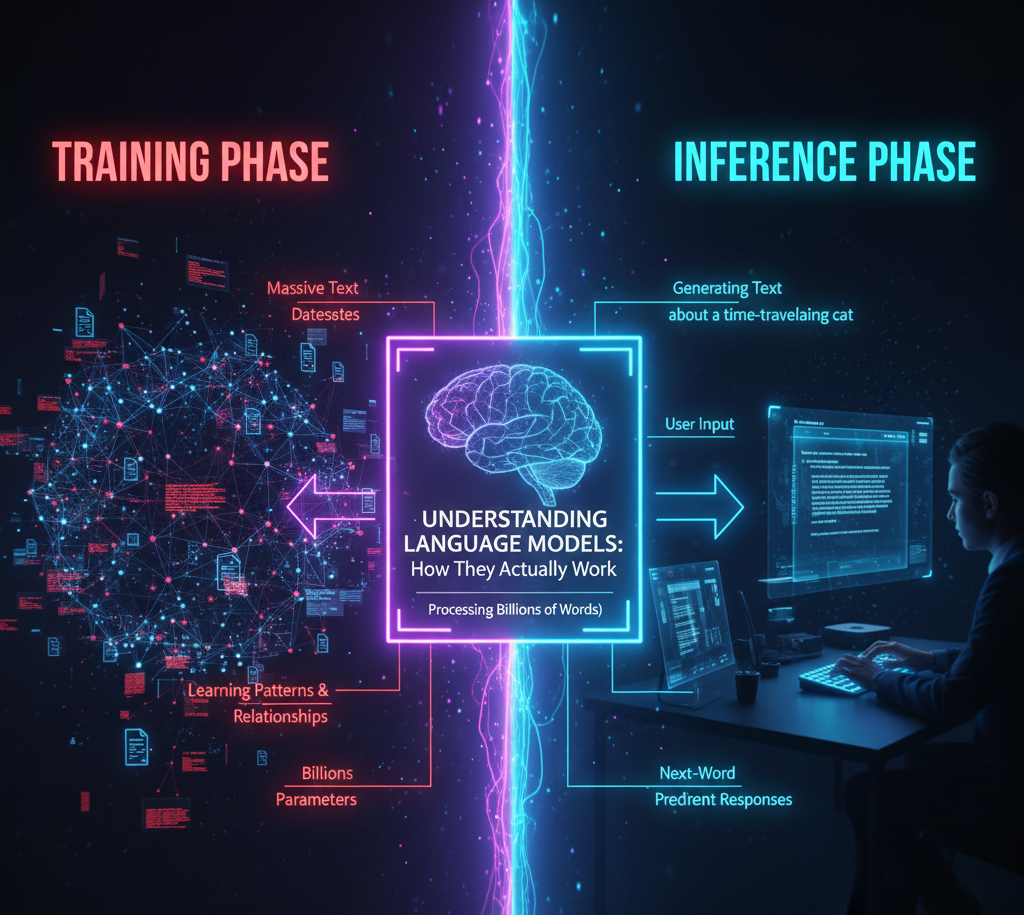

How LLMs Are Trained

Training involves feeding the model billions or even trillions of tokens from books, articles, websites, and other sources. During this process, the model repeatedly predicts the next token in a sequence, measures how far off that prediction was (typically with a cross-entropy loss), and adjusts its internal parameters by gradient descent to reduce the error. Over time, it learns patterns in grammar, writing style, factual associations, and logical structures. This statistical learning is what allows the model to perform tasks that seem intelligent, even though it doesn’t truly understand meaning.
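
The predict, measure error, adjust loop can be sketched at toy scale. This is a minimal illustration, assuming a hypothetical "model" whose only parameters are a table of next-token scores indexed by the previous token (a bigram model) and a made-up corpus of token IDs. Real LLMs use the same next-token cross-entropy objective but a Transformer with billions of parameters and automatic differentiation instead of a hand-written gradient.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train_bigram(token_ids, vocab_size, lr=0.5, epochs=200):
    """Fit a toy next-token predictor with gradient descent on cross-entropy.

    The only parameters are a vocab_size x vocab_size table of logits:
    row `prev` scores every candidate next token given the previous token.
    """
    W = [[0.0] * vocab_size for _ in range(vocab_size)]
    pairs = list(zip(token_ids, token_ids[1:]))  # (current token, true next token)
    history = []
    for _ in range(epochs):
        grads = [[0.0] * vocab_size for _ in range(vocab_size)]
        total_loss = 0.0
        for prev, nxt in pairs:
            probs = softmax(W[prev])
            total_loss += -math.log(probs[nxt])  # cross-entropy on the true next token
            # Gradient of cross-entropy w.r.t. the logits is probs - one_hot(nxt).
            for j in range(vocab_size):
                grads[prev][j] += probs[j] - (1.0 if j == nxt else 0.0)
        # Gradient descent: nudge every parameter against its average gradient.
        for i in range(vocab_size):
            for j in range(vocab_size):
                W[i][j] -= lr * grads[i][j] / len(pairs)
        history.append(total_loss / len(pairs))
    return W, history

# Hypothetical corpus of token IDs: 0="the", 1="cat", 2="sat", 3="ran".
corpus = [0, 1, 2, 0, 1, 2, 0, 1, 3]
W, history = train_bigram(corpus, vocab_size=4)
print(f"loss: {history[0]:.3f} -> {history[-1]:.3f}")  # loss falls during training
p = softmax(W[1])  # next-token distribution after "cat"
print([round(x, 2) for x in p])  # "sat" (ID 2) ends up most probable
```

Because "cat" is followed by "sat" twice and "ran" once, the trained scores favor "sat" without any rule saying so; the preference emerges purely from reducing prediction error on the data.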

The Transformer Architecture and Attention Mechanisms

Modern LLMs are built on the Transformer architecture, which is organized around the attention mechanism (specifically self-attention) rather than the recurrent networks used in earlier language models. Attention lets the model weigh how relevant each part of the input is to every other part, instead of processing all words equally. This capability enables the model to capture long-range dependencies, maintain context, and produce coherent outputs even for complex or multi-step tasks.
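
The core attention computation is scaled dot-product attention, softmax(QKᵀ / √d_k)·V. The sketch below is a simplified single-head version that assumes the query, key, and value vectors are given directly; in a real Transformer they are produced by multiplying token embeddings with learned weight matrices, and several heads run in parallel. The `causal` flag shows the masking used in decoder-style LLMs so that a token cannot attend to tokens after it.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]  # exp(-inf) evaluates to 0.0
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V, causal=False):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Q, K and V are lists of row vectors, one row per token position.
    Returns the output rows and the attention weights for each query.
    """
    d_k = len(K[0])
    outputs, weights_per_query = [], []
    for i, q in enumerate(Q):
        scores = []
        for j, k in enumerate(K):
            if causal and j > i:
                scores.append(float("-inf"))  # mask out future positions
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k))
        w = softmax(scores)
        # Each output row is a weighted average of the value vectors.
        out = [sum(w_j * v[c] for w_j, v in zip(w, V)) for c in range(len(V[0]))]
        outputs.append(out)
        weights_per_query.append(w)
    return outputs, weights_per_query

# Three hypothetical token vectors; here Q = K = V = X for simplicity.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, w = attention(X, X, X)
print([round(x, 2) for x in w[0]])  # token 0 weighs tokens 0 and 2 more than token 1
out_c, w_c = attention(X, X, X, causal=True)
print(w_c[0])  # [1.0, 0.0, 0.0]: the first token can only attend to itself
```

The weights are computed from the content of the vectors themselves, which is what lets attention link related tokens regardless of how far apart they sit in the sequence.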

Parameters and Model Size

Parameters are the numerical values inside the model, learned during training, that determine how it processes information. Large models, with billions of parameters, can recognize more complex patterns and maintain more nuanced context. Smaller models are faster and less resource-intensive but may produce simpler or less accurate outputs. Parameter count strongly influences a model’s ability to handle complex tasks and generate reliable text, though the amount and quality of training data matter as well.
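
To show where parameter counts come from, the function below tallies the weights in a GPT-2-style decoder-only Transformer. It is a sketch under stated assumptions (learned positional embeddings, biases on every linear layer, two LayerNorms per block plus a final one, an MLP hidden size of 4 × `d_model`, and output weights tied to the token embeddings); other architectures make different choices and will count differently. With GPT-2 small's published configuration it comes to roughly 124 million, in line with that model's commonly cited size.

```python
def count_params(vocab_size, d_model, n_layers, context_len, d_ff=None):
    """Count parameters in a GPT-2-style decoder-only Transformer.

    Assumptions: learned positional embeddings, biases on every linear layer,
    two LayerNorms per block plus one final LayerNorm, an MLP hidden size of
    4 * d_model by default, and an output layer that shares (ties) its weights
    with the token embedding table.
    """
    d_ff = d_ff if d_ff is not None else 4 * d_model
    embeddings = vocab_size * d_model + context_len * d_model  # token + position
    attention = 4 * (d_model * d_model + d_model)  # Q, K, V and output projections
    mlp = (d_model * d_ff + d_ff) + (d_ff * d_model + d_model)  # two linear layers
    layer_norms = 2 * (2 * d_model)  # each LayerNorm has a gain and a bias vector
    per_layer = attention + mlp + layer_norms
    final_norm = 2 * d_model
    return embeddings + n_layers * per_layer + final_norm

# GPT-2 small's published configuration.
print(f"{count_params(50257, 768, 12, 1024):,}")  # 124,439,808
# Doubling the width roughly quadruples the per-layer weight matrices.
print(f"{count_params(50257, 1536, 12, 1024):,}")
```

Most of the per-layer count comes from the d_model × d_model and d_model × d_ff matrices, which is why width drives model size so strongly: each block contributes roughly 12 × d_model² weights under these assumptions.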

Generating Text Responses

When given a prompt, an LLM converts it into tokens, applies attention to build a representation of the context, computes a probability distribution over possible next tokens, selects one (either the most probable token or a random sample weighted by those probabilities), appends it, and repeats this process until the response is complete. The output is generated dynamically rather than retrieved from memory. The sampling step is why the model can produce varied responses to the same prompt, and the token-by-token generation is why accuracy may fluctuate depending on context and complexity.
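
The generation loop and the difference between picking the most probable token (greedy decoding) and sampling can be sketched as follows. The "model" here is a hypothetical hard-coded table of next-token probabilities keyed only on the last token; a real LLM recomputes the distribution from the whole context at every step. `temperature` is the common name for the setting that sharpens or flattens the distribution before sampling.

```python
import math
import random

# Hypothetical toy "model": probabilities of the next token given the last one.
NEXT_TOKEN_PROBS = {
    "<start>": {"The": 0.6, "A": 0.4},
    "The": {"cat": 0.5, "dog": 0.3, "model": 0.2},
    "A": {"cat": 0.4, "dog": 0.6},
    "cat": {"sat": 0.7, "<end>": 0.3},
    "dog": {"ran": 0.6, "<end>": 0.4},
    "model": {"predicts": 0.9, "<end>": 0.1},
    "sat": {"<end>": 1.0},
    "ran": {"<end>": 1.0},
    "predicts": {"<end>": 1.0},
}

def next_token(context, temperature, rng):
    probs = NEXT_TOKEN_PROBS[context[-1]]
    tokens = list(probs)
    if temperature == 0:
        return max(tokens, key=probs.get)  # greedy: always the most probable token
    # Temperature rescales log-probabilities; rng.choices renormalizes the weights.
    weights = [math.exp(math.log(probs[t]) / temperature) for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

def generate(temperature=0.0, max_tokens=10, seed=None):
    """Append one token at a time until <end> or the length limit."""
    rng = random.Random(seed)
    context = ["<start>"]
    for _ in range(max_tokens):
        tok = next_token(context, temperature, rng)
        if tok == "<end>":
            break
        context.append(tok)
    return " ".join(context[1:])

print(generate(temperature=0.0))  # The cat sat
for seed in range(3):
    print(generate(temperature=1.0, seed=seed))  # may differ from run to run of seeds
```

Greedy decoding returns the same text every time, while sampling with a nonzero temperature can produce different continuations of the same prompt, matching the variability described above.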

Fine-Tuning and Human Feedback

LLMs can be fine-tuned for specific tasks or industries. Instruction tuning teaches the model to follow user directions more accurately, while reinforcement learning from human feedback (RLHF) helps align the outputs with human expectations. Fine-tuning allows the model to perform specialized functions, such as drafting emails, assisting with coding, or providing customer support.
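
RLHF pipelines commonly begin by training a reward model on human comparisons ("response A is better than response B") using a pairwise, Bradley-Terry-style loss, and then optimize the LLM against that reward with a reinforcement learning algorithm such as PPO. The sketch below covers only the reward-model step and is heavily simplified: it assumes a hypothetical linear reward over three hand-picked features, whereas real reward models are themselves large neural networks scoring raw text.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def preference_loss(r_chosen, r_rejected):
    """Pairwise loss: small when the preferred response gets the higher reward."""
    return -math.log(sigmoid(r_chosen - r_rejected))

def reward(w, features):
    """Hypothetical linear reward: a weighted sum of response features."""
    return sum(wi * xi for wi, xi in zip(w, features))

def train_reward_model(pairs, n_features, lr=0.1, epochs=200):
    w = [0.0] * n_features
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = reward(w, chosen) - reward(w, rejected)
            # d(loss)/d(margin) = -(1 - sigmoid(margin)); step against the gradient.
            scale = 1.0 - sigmoid(margin)
            for i in range(n_features):
                w[i] += lr * scale * (chosen[i] - rejected[i])
    return w

# Hypothetical features per response: [answers_question, polite_tone, contains_error]
pairs = [
    ([1, 1, 0], [1, 0, 0]),  # labelers preferred the polite answer
    ([1, 0, 0], [1, 0, 1]),  # ...preferred the answer without an error
    ([1, 0, 0], [0, 0, 0]),  # ...preferred an answer over a non-answer
]
w = train_reward_model(pairs, n_features=3)
print([round(x, 2) for x in w])  # positive, positive, negative
```

The learned weights encode the labelers' preferences (reward answering and politeness, penalize errors), and it is this learned signal, not a hand-written rule, that the later reinforcement learning stage pushes the LLM toward.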

Limitations of LLMs

Despite their capabilities, LLMs are not conscious or capable of true understanding. They can generate incorrect information, misinterpret context, or produce outputs that appear plausible but are false. Their knowledge is also limited to the data they were trained on, and they do not access real-time information unless integrated with external sources.

Real-World Applications

LLMs are widely used in industries such as healthcare, finance, education, and customer service. They assist with content creation, language translation, summarization, research, and automated support. Their adaptability allows businesses and individuals to save time and increase productivity, but careful oversight is required to ensure accuracy and ethical use.

The Future of LLMs

Ongoing developments in LLMs include multimodal models capable of processing text, images, and audio together, improved reasoning abilities, and tighter integration with external knowledge databases. While AI will continue to advance, human expertise remains essential for interpretation, validation, and decision-making.

Large Language Models are powerful tools that transform how we interact with information, but their strength lies in augmenting human capabilities rather than replacing them entirely. By understanding their inner workings, users can harness their potential safely and effectively.
